TL;DR — I promise my work is my work in The Curse of the Unholy Grail. Virtually no text from generative AI will appear in what I write, except the occasional molecule in the organism. But AI can be directed helpfully, … it’s like fire that way.

Clever Friends

Writers wouldn’t balk at asking a writer buddy “Aigh, I’ve painted myself into a corner: The aliens have my heroine, yet the MacGuffin fell out at the Toyota dealership! How do I resolve this?” Of course, your buddy’s going to suggest some ideas, recall some bits of lore, allude to something similar in other literature, … or advise a re-write. As synergy builds, they might spout some pithy dialog or a witty description, spurring your imagination.

Of expert friends, you might ask “Did the Aztecs have doppelganger monsters?” or “Could a corsage of flowers impart enough heart-stopping poison to kill everyone at the punch-bowl?” or “How many people a year wake up on the autopsy table?”

Your beta-reader buddy may give feedback on plot structure, pacing, character relatability, etc. (Though you probably already used tools for spelling and grammar checks, like subject-verb agreement, sentence-fragment detection, etc.)

One thing, however, you would neither ask of nor accept from your clever friend: entire scenes handed over for you to copy-’n’-paste into your book. No, you would not! It’s plagiarism. It’s intellectual abdication. It’s a breach of reader faith. It’s atrophy of your creative muscle. It’s a hollowing-out of fulfillment and pride. (Or at least it is for those works where we innately know “authenticity” matters.)

Okay, now imagine you don’t have a dozen friends who are writing coaches, forensic pathologists, ancient Egyptian religious scholars, tropical-disease epidemiologists, or even anal-retentive grammarians. But you do have ChatGPT Plus. Should you talk to it?

Layers of Ethics

Philosopher Immanuel Kant had a good ethical litmus test: What if everyone did thus?

On a superficial level, then: What if everyone could select and summarize a stack of library books at midnight? Could bounce ideas around without judgment? Could brainstorm solutions (or complications) with someone who will outlast whatever level of caffeine you can safely ingest? Well, people could speed up their work. People could see quality improvements (compared with never getting feedback at all, … though folks still have to be careful not to misinterpret it or apply it mindlessly). And achievement could become more egalitarian (where formerly it took wealth or social connections to unlock helpful resources).

So far, so good. Demand-side, at least.

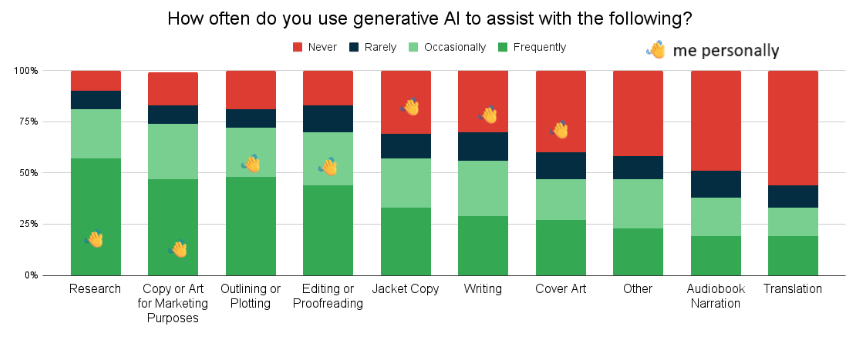

Writers appear to agree, according to this BookBub AI use survey (overlaid with a waving hand showing how I’d have answered). BuildBookBuzz’s article “Using AI as your authoring assistant” suggests yet more uses.

AI Ethics and the Supply Side

Fine, but what if everyone had no more need for various book-related professional services? Developmental Editors, Proofreaders, Cover-Designers. Or — God forbid! — Urban Fantasy authors themselves! 😟

What I can and can’t control, in any practical sense, dictates my guilt. I’m not sure boycotting ChatGPT will put that genie back in its bottle and save entire industries for all eternity.

But AI used as an adjunct, say, for a pre-engagement pass before hiring flesh-and-blood professionals, will, I hope … well, wrap a cushioning layer of Kleenex around the brick that Generative AI’s chucking at creatives’ livelihoods.

I’m fortunate enough to be able to afford a Developmental Editor and a Cover-Designer. Human ones! To me, my book will be more authentic, and more supportive of the writing community, for it. (Whether I’ll have the same will, or wherewithal, a decade hence is less certain.)

In my mind, I’ve freed my editor to concentrate on substantive issues by first using software/AI tools to clear out simple, egregious errors. (ProWritingAid, Grammarly, etc. feel less controversial to talk about at parties, yet they use LLMs more and more. But the guilty secret from the BookBub survey above is that we authors overwhelmingly use ChatGPT. I do.)

Did AI Steal Its Abilities?

Going deeper, AI trains at scale on data it may not hold derivative-use licenses for, which is why many fear AI plunders like a pirate. One does not say this of the English professor who paid once for his Ph.D. and who uses libraries and second-hand shops for content he might use to educate students for years. But lots of people say Google is damaging journalism. I’m not saying no shade of gray is dark enough to call black; only that the nuances will take years to yield good policies (assuming politics admits good policies).

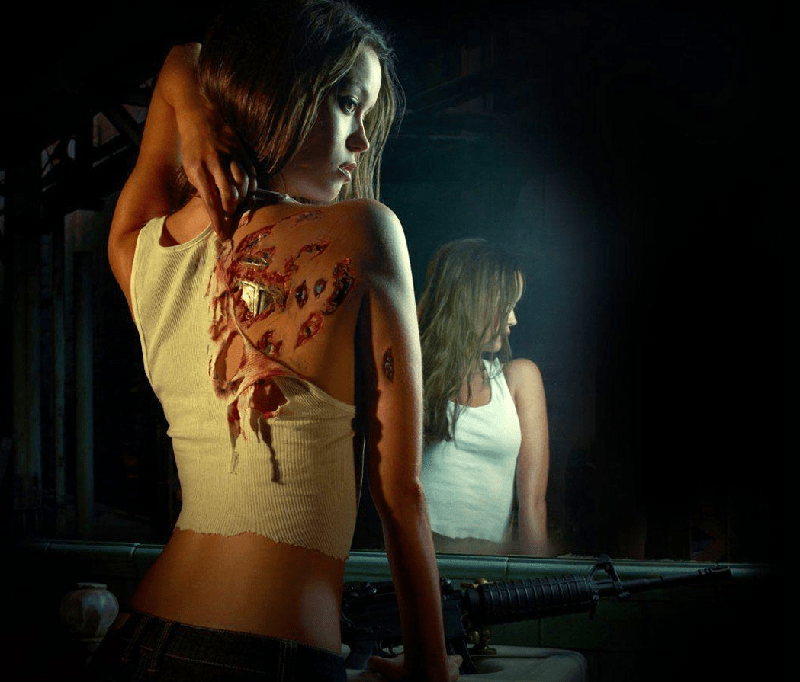

I’m happy if AI trains itself to improve its sense of style from my fiction (spoken like a paragon of humility, I know). Or happy until it writes better than I do, … and so quickly and cheaply that certain immoral humans sic that trained-on-my-œuvre terminatrix on my book sales.

In several domains, walled access to information had propped up middleman inefficiencies and/or compromised informed decision-making. Opening the data (stealing the secrets, the abilities, of the gatekeepers) arguably made the world better. Zillow, some claim, brought transparency to real estate this way, improving the industry. And what if AI reads more medical-journal articles than doctors do, applies the knowledge without bias or fatigue, and reduces the need for, say, radiologists? Still, what weight should an indie, debut author place on that implication as he fires up his writing style-checker?

Confessions

Okay, okay, my author web-site has several OpenAI Sora images. Some of them are roughs/gists that I then gave to the Cover-Designer. But some I made just because I can’t afford to commission every little character thumbnail or whatever, and Pexels only has so much. Thus, unless my subscribers are happy seeing Unholy Grail character pics from the Skyrim character editor, Sora it is. Where authenticity matters to me is the cover art itself.

While I’m unburdening my soul: true, when I converse with ChatGPT, it may cough up prose. Sometimes, wicked good prose. For example, I chatted about which of two arguing characters holds the moral high ground (does a vampire’s intrinsic evil negate any good it does?), and the conversation yielded not just dry philosophical positions, but sample dialog. One line: “What if Heaven is fresh out of saints?” Bam! That’s just what Nikki would say! I had to put that in the book (and it became my author web-site tagline). In another case, I needed a justification for why some characters experience what others don’t, and ChatGPT suggested “Demons want fresh souls, not ones that already scream at night.” Damn. Punchy, pithy, what’s not to love? Maybe I tweaked it, I forget, to soothe my conscience (as all authors do when they ‘appropriate’ some Virginia Woolf or Mark Twain witticism).

Virtually no AI-gen text, I said. Like 0.03%. Much less than the 0.08% blood-alcohol limit in Washington State, so … not irresponsible levels of AI intoxicants.

On decision points, though, AI’s helped more. It was ChatGPT that suggested my allusion to Virgil from Dante’s Inferno was less a match for Nikki’s post-supernatural-ordeal mindset than, say, Aeneas from the Aeneid (Virgil’s, ironically). Should I have actually sought a human sounding-board for that decision? Seriously?

And the Almost-Sin

As I said, I wanted a Developmental Editor (DE). Given my STEM-heavy education and career, I just wanted a pro set of eyes on my debut novel … even at the cost of a couple thousand dollars. But I didn’t want him wasting time on low-hanging fruit, hence a pass through ChatGPT first. Sadly, I hated the GPT-4o attempt.

Drop in one scene at a time, and it gave some good advice. Drop in multiple chapters and ask for a corpus-wide review of theme development, imagery repetition, magic-system consistency/duplicated description, rise-and-fall of reader/character emotion, etc., and ChatGPT gibbered horribly, offering clueless corrections.

I’d challenge, “How’d you figure X, given context Y?” and it would sheepishly repent and adjust its rule-logic. Every adjustment caused collateral damage to other rules. Eventually, in a fugue state, conversations would crash. Updated files would be ignored. One time, every uploaded file simply disappeared! That vindicated my decision to hire a DE (yet if the ChatGPT experience hadn’t been so disastrous, …? This might be called an Easy-Virtue decision).

Conclusion

There you have it. I have used a robot as a clever friend. My conscience is clear, yet I can’t seem to put the issue to bed. It feels like other aspects of modern life that way: fast fashion, our meat-industry conditions, picking air-travel vacations, yada yada. But I feel I’m using this power responsibly. I hope others choose to as well.
